It’s Just Adding One Word at a Time

That ChatGPT can automatically generate something that reads even superficially like human-written text is remarkable, and unexpected. But how does it do it? And why does it work? My purpose here is to give a rough outline of what’s going on inside ChatGPT—and then to explore why it is that it can do so well in producing what we might consider to be meaningful text. I should say at the outset that I’m going to focus on the big picture of what’s going on—and while I’ll mention some engineering details, I won’t get deeply into them. (And the essence of what I’ll say applies just as well to other current “large language models” [LLMs] as to ChatGPT.)

The first thing to explain is that what ChatGPT is always fundamentally trying to do is to produce a “reasonable continuation” of whatever text it’s got so far, where by “reasonable” we mean “what one might expect someone to write after seeing what people have written on billions of webpages, etc.”

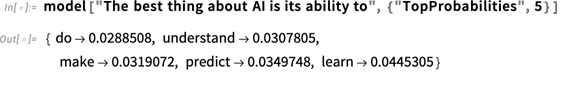

So let’s say we’ve got the text “The best thing about AI is its ability to”. Imagine scanning billions of pages of human-written text (say on the web and in digitized books) and finding all instances of this text, then seeing which word comes next and what fraction of the time. ChatGPT effectively does something like this, except that (as I’ll explain) it doesn’t look at literal text; it looks for things that in a certain sense “match in meaning”. But the end result is that it produces a ranked list of words that might follow, together with “probabilities”:
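As a toy illustration of the literal-text version of this counting idea (which is *not* what ChatGPT does, since it matches meaning rather than exact text), here is a minimal Python sketch. It scans a tiny made-up corpus for a context and tallies which words follow it. The function name `next_word_fractions` and the corpus are hypothetical, and this is not the Wolfram Language code behind the article's pictures.

```python
from collections import Counter

def next_word_fractions(corpus, context):
    """Find every occurrence of `context` (a list of words) in `corpus` and
    return (next_word, fraction) pairs, most frequent first."""
    words = corpus.split()
    n = len(context)
    counts = Counter(
        words[i + n]
        for i in range(len(words) - n)
        if words[i:i + n] == context
    )
    total = sum(counts.values())
    return [(word, count / total) for word, count in counts.most_common()]

# A made-up three-sentence "corpus"; the real idea involves billions of pages.
corpus = (
    "the best thing about ai is its ability to learn . "
    "the best thing about ai is its ability to predict . "
    "the best thing about ai is its ability to learn quickly ."
)
print(next_word_fractions(corpus, "its ability to".split()))
```

Here “learn” follows the context in two of three occurrences and “predict” in one, so they come out with fractions 2/3 and 1/3: a ranked list with “probabilities”, just at a vastly smaller scale.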

And the remarkable thing is that when ChatGPT does something like write an essay, what it’s essentially doing is just asking over and over again “given the text so far, what should the next word be?”, and each time adding a word. (More precisely, as I’ll explain, it’s adding a “token”, which could be just a part of a word, which is why it can sometimes “make up new words”.)
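The “ask what comes next, then add it” loop can be sketched in a few lines of Python. This is a minimal sketch under loud assumptions: the token table `TOKEN_MODEL` is entirely hypothetical, and unlike a real LLM it looks only at the last token rather than at the whole text so far. The leading-space convention for word-initial tokens loosely mimics GPT-2-style tokenizers, but the tokens themselves are made up.

```python
import random

# Hypothetical toy model over subword tokens: for each token, the probabilities
# of the token that comes next. (A real LLM looks at the whole text so far, not
# just the last token; this only mimics the "add one token at a time" loop.)
# A leading space marks the start of a new word.
TOKEN_MODEL = {
    "<start>": {"The": 1.0},
    "The": {" un": 0.5, " sun": 0.5},
    " un": {"believ": 0.6, "shin": 0.4},
    " sun": {"shin": 1.0},
    "believ": {"able": 1.0},
    "shin": {"able": 0.5, "y": 0.5},
}

def generate_tokens(steps, rng):
    """Repeatedly ask "what comes next?" and append one sampled token."""
    tokens = ["<start>"]
    for _ in range(steps):
        options = TOKEN_MODEL.get(tokens[-1])
        if not options:  # no known continuation, so stop
            break
        candidates = list(options)
        weights = [options[t] for t in candidates]
        tokens.append(rng.choices(candidates, weights=weights)[0])
    return "".join(tokens[1:])  # drop the start marker and glue tokens together

rng = random.Random(0)
for _ in range(5):
    print(generate_tokens(10, rng))
```

Some runs glue “shin” and “able” into “unshinable” or “sunshinable”, words that appear nowhere as a whole in the table. That is a small-scale version of how token-by-token generation can “make up new words”.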

But, OK, at each step it gets a list of words with probabilities. Which one should it actually pick to add to the essay (or whatever) that it’s writing? One might think it should be the “highest-ranked” word (i.e. the one to which the highest “probability” was assigned). But this is where a bit of voodoo begins to creep in. Because for some reason (one that maybe one day we’ll understand scientifically), if we always pick the highest-ranked word, we’ll typically get a very “flat” essay that never seems to “show any creativity” (and sometimes even repeats itself word for word). But if sometimes (at random) we pick lower-ranked words, we get a “more interesting” essay.
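To make the two strategies concrete, here is a minimal Python sketch contrasting them on one hypothetical next-word distribution (the function names and probabilities are made up for illustration). Always taking the top word gives the same answer every time; sampling in proportion to probability sometimes picks lower-ranked words.

```python
import random

def pick_top(probs):
    """Always choose the highest-ranked word."""
    return max(probs, key=probs.get)

def pick_random(probs, rng):
    """Choose a word at random, in proportion to its probability, so
    lower-ranked words are sometimes picked."""
    words = list(probs)
    return rng.choices(words, weights=[probs[w] for w in words])[0]

# Hypothetical next-word probabilities, for illustration only.
probs = {"learn": 0.45, "predict": 0.25, "make": 0.2, "understand": 0.1}

rng = random.Random(42)
print("top:   ", [pick_top(probs) for _ in range(8)])
print("random:", [pick_random(probs, rng) for _ in range(8)])
```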

The fact that there’s randomness here means that if we use the same prompt multiple times, we’re likely to get different essays each time. And, in keeping with the idea of voodoo, there’s a particular so-called “temperature” parameter that determines how often lower-ranked words will be used, and for essay generation, it turns out that a “temperature” of 0.8 seems best. (It’s worth emphasizing that there’s no “theory” being used here; it’s just a matter of what’s been found to work in practice. And, for example, the concept of “temperature” is there because exponential distributions familiar from statistical physics happen to be used, but there’s no “physical” connection, at least so far as we know.)
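The text doesn't spell out the formula, so here is a sketch assuming the common convention: divide log-probabilities (or logits) by the temperature T and renormalize, which is equivalent to replacing each probability p by p^(1/T). This is the Boltzmann-like exponential form from statistical physics with “energy” −log p. The function name `apply_temperature` is hypothetical.

```python
import math

def apply_temperature(probs, temperature):
    """Reweight a probability distribution by a "temperature" T.

    Each probability p becomes p ** (1 / T), then everything is renormalized.
    This equals dividing log-probabilities by T before a softmax.
    All probabilities must be positive (log(0) is undefined).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive; T -> 0 is the greedy limit")
    logits = {w: math.log(p) / temperature for w, p in probs.items()}
    m = max(logits.values())  # subtract the max before exp() for numerical stability
    weights = {w: math.exp(z - m) for w, z in logits.items()}
    total = sum(weights.values())
    return {w: x / total for w, x in weights.items()}

probs = {"learn": 0.5, "predict": 0.3, "make": 0.2}
for t in (0.2, 0.8, 1.0, 2.0):
    tempered = apply_temperature(probs, t)
    print(t, {w: round(p, 3) for w, p in tempered.items()})
```

T = 1 leaves the distribution unchanged; T below 1 sharpens it toward the top word (as T → 0 it becomes “always pick the top word”); T above 1 flattens it so lower-ranked words get picked more often. A value like 0.8 sits slightly on the “sharper” side.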

Before we go on I should explain that for purposes of exposition I’m mostly not going to use the full system that’s in ChatGPT; instead I’ll usually work with a simpler GPT-2 system, which has the nice feature that it’s small enough to be able to run on a standard desktop computer. And so for essentially everything I show I’ll be able to include explicit Wolfram Language code that you can immediately run on your computer.

For example, here’s how to get the table of probabilities above. First, we have to retrieve the underlying “language model” neural net:

Later on, we’ll look inside this neural net, and talk about how it works. But for now we can just apply this “net model” as a black box to our text so far, and ask for the top 5 words by probability that the model says should follow:
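Selecting the top 5 from a full distribution is a standard “k largest” problem. As a rough Python analogue (not the Wolfram Language code used here), this sketch uses `heapq.nlargest` on a hypothetical, tiny distribution; a real model assigns a probability to every token in its vocabulary.

```python
import heapq

def top_k(probs, k=5):
    """Return the k most probable (word, probability) pairs, highest first."""
    return heapq.nlargest(k, probs.items(), key=lambda item: item[1])

# Hypothetical model output: a probability for every word in a tiny vocabulary.
probs = {"learn": 0.30, "predict": 0.20, "make": 0.15, "understand": 0.12,
         "do": 0.10, "see": 0.08, "help": 0.05}

for word, p in top_k(probs):
    print(f"{word:<12}{p:.2f}")
```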

This takes that result and makes it into an explicit formatted “dataset”:


Here’s what happens if one repeatedly “applies the model”—at each step adding the word that has the top probability (specified in this code as the “decision” from the model):

What happens if one goes on longer? In this (“zero temperature”) case what comes out soon gets rather confused and repetitive:
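One simple way to see why zero-temperature output can turn repetitive: in a toy model where the next word depends only on the previous word, always taking the top word is fully deterministic, so as soon as any word recurs, everything after it repeats forever. The bigram table below is hypothetical, and GPT-2 conditions on far more context than one word, so this is only an analogy for the behavior described above.

```python
# Hypothetical bigram table: each word maps to its next-word probabilities.
BIGRAMS = {
    "the": {"cat": 0.5, "dog": 0.3, "end": 0.2},
    "cat": {"saw": 0.6, "ran": 0.4},
    "dog": {"saw": 0.7, "ran": 0.3},
    "saw": {"the": 0.8, "a": 0.2},
    "ran": {"away": 1.0},
}

def greedy_generate(start, steps):
    """Repeatedly append the single most probable next word (temperature 0)."""
    words = [start]
    for _ in range(steps):
        options = BIGRAMS.get(words[-1])
        if not options:  # dead end in the toy table
            break
        words.append(max(options, key=options.get))
    return words

def first_repeat(words):
    """Return the index where a word first reappears, or None if none does."""
    seen = set()
    for i, w in enumerate(words):
        if w in seen:
            return i
        seen.add(w)
    return None

out = greedy_generate("the", 9)
print(" ".join(out))
print("starts repeating at position", first_repeat(out))
```

This prints “the cat saw the cat saw the cat saw the”: once “the” comes back at position 3, the greedy choices can only retrace the same loop.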

But what if instead of always picking the “top” word one sometimes randomly picks “non-top” words (with the “randomness” corresponding to “temperature” 0.8)? Again one can build up text:
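Continuing the toy analogy, here is a minimal sketch of the same loop with temperature-0.8 sampling instead of greedy choice. The bigram table, function names, and seeds are hypothetical; each probability p is reweighted to p^(1/T) before sampling.

```python
import random

# Hypothetical bigram table (next-word probabilities given the previous word).
BIGRAMS = {
    "the": {"cat": 0.5, "dog": 0.3, "end": 0.2},
    "cat": {"saw": 0.6, "ran": 0.4},
    "dog": {"saw": 0.7, "ran": 0.3},
    "saw": {"the": 0.8, "a": 0.2},
    "ran": {"away": 1.0},
}

def sample_next(options, temperature, rng):
    """Sample one word after reweighting each probability p to p ** (1 / T)."""
    words = list(options)
    weights = [options[w] ** (1.0 / temperature) for w in words]
    return rng.choices(words, weights=weights)[0]  # choices() accepts unnormalized weights

def sample_generate(start, steps, temperature, seed):
    """Build up text one sampled word at a time, reproducibly for a given seed."""
    rng = random.Random(seed)
    words = [start]
    for _ in range(steps):
        options = BIGRAMS.get(words[-1])
        if not options:  # dead end in the toy table
            break
        words.append(sample_next(options, temperature, rng))
    return words

for seed in range(3):
    print(" ".join(sample_generate("the", 9, 0.8, seed)))
```

Different seeds typically give different texts from the same starting word, while reusing a seed reproduces a run exactly. That mirrors how the same prompt can yield a different essay each time.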
