Originally published in Forbes

Why in the world would the decades-old industry of machine learning need a completely new paradigm?

Imagine you’re developing a rocket. If you don’t stress test it—in its intended usage (via simulations, wind tunnels, etc.)—then its launch will be a shot in the dark, if not entirely scrubbed by sensible decision makers.

That’s the status of most enterprise ML projects, aka predictive AI. The industry hasn’t matured to the point where such pre-launch stress testing is commonplace. As a result, most predictive AI projects are scrubbed before they ever deploy. Predictive AI’s great potential is clear, and many projects achieve it, but many more don’t.

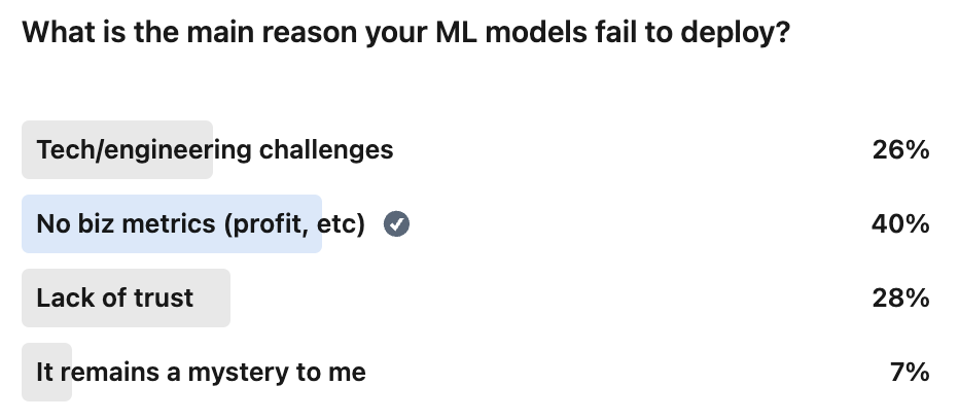

Predictive AI’s dismal track record is readily avoidable. The first and most fundamental remedy would be to routinely establish its business performance pre-launch. Check out my LinkedIn poll from a few months ago:

The results of a poll held on LinkedIn.

Per the winner, “no biz metrics” (40%), seasoned predictive AI professionals are crying out for business metrics such as profit and savings. That is, they want to move from the standard practice of evaluating predictive models to valuating them, aka ML valuation.

Perhaps the reason such metrics are still not the norm is that calculating them means opening a can of worms: You’ve got to involve more of the business context.

Sticking with technical metrics alone may feel safer for many data scientists. Doing so keeps them in their comfort zone, working in more abstract terms without the need to involve messy realities introduced by business operationalization.

But technical metrics are arcane and disconnected from business priorities. Sticking with them in lieu of business metrics undoubtedly contributes to the poll’s runner-up, “Lack of trust” (28%). Technical metrics tell you the degree to which an ML model predicts better than guessing. Without a magic crystal ball, predicting better than guessing is the best we can hope for, and, indeed, knowing we’ve achieved that often signals that a model is potentially valuable. But it does not tell you how valuable, in concrete business terms like profit or savings.
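To make “better than guessing” concrete, here is a minimal sketch of lift, one common technical metric. The article doesn’t name a specific metric, so choosing lift is an assumption, as are the helper name `lift_at` and the toy scores and outcomes. Note that the result is a unitless ratio: it says how many times more often the model’s top picks are positive than random picks would be, but it carries no dollar figure.

```python
def lift_at(scores: list[float], labels: list[int], fraction: float) -> float:
    """Lift among the top `fraction` of cases, ranked by model score.

    Lift = positive rate among top-scored cases / overall positive rate.
    A lift of 1.0 means no better than guessing (random targeting).
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    # Rank cases from highest to lowest model score.
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    k = max(1, round(fraction * len(ranked)))  # number of cases targeted
    top_rate = sum(label for _, label in ranked[:k]) / k
    base_rate = sum(labels) / len(labels)
    return top_rate / base_rate


# Hypothetical toy data: model scores and actual outcomes (1 = positive case).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]

print(lift_at(scores, labels, 0.2))  # top 20%: 2 of 2 positive vs. a 40% base rate
```

With this toy data, the top 20% of cases yield a lift of 2.5. That signals the model is potentially valuable, yet it says nothing about profit or savings.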

So, if calculating the business value must involve knotty business realities, then there’s only one possible conclusion: Opening that can of worms must be the new discipline.

Predictive AI is embarrassingly horizontal. It targets overdue debtors, promising donors, abandoned shopping carts, defecting customers, fraudulent transactions, malfunctioning equipment and no-show healthcare patients. But alongside these wins, each use case also introduces costs: the price you pay each time the model mistargets, that is, each false positive. The technical metrics that most predictive AI projects stick with do not quantify the costs of these missteps. Because those metrics fail to place a dollar amount on each win and loss, the business value remains a vague supposition.
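Placing a dollar amount on each win and loss can be sketched in a few lines. This is a minimal illustration under simplifying assumptions: a flat dollar gain per true positive and a flat dollar cost per false positive, plus hypothetical names (`Outcomes`, `business_value`) and made-up counts for two models. It shows that a technical metric such as precision cannot, by itself, tell you which model is worth more.

```python
from dataclasses import dataclass


@dataclass
class Outcomes:
    """Confusion-matrix counts for the cases a model targeted (hypothetical)."""
    true_positives: int   # correctly targeted cases: the wins
    false_positives: int  # mistargeted cases: the costly missteps

    @property
    def precision(self) -> float:
        """A technical metric: share of targeted cases that were correct."""
        return self.true_positives / (self.true_positives + self.false_positives)


def business_value(outcomes: Outcomes, gain_per_tp: float, cost_per_fp: float) -> float:
    """Net dollar value: gain from each win minus the price of each mistarget.

    Assumes a flat gain per true positive and a flat cost per false positive;
    real use cases may call for a richer cost model.
    """
    return outcomes.true_positives * gain_per_tp - outcomes.false_positives * cost_per_fp


# Two hypothetical models evaluated on the same held-out data.
model_a = Outcomes(true_positives=300, false_positives=700)
model_b = Outcomes(true_positives=200, false_positives=300)

for cost_per_fp in (20, 50):  # two hypothetical assumptions about the cost of a misstep
    value_a = business_value(model_a, 100, cost_per_fp)
    value_b = business_value(model_b, 100, cost_per_fp)
    print(
        f"cost per false positive {cost_per_fp}: "
        f"A {value_a:+,.0f} (precision {model_a.precision:.2f}), "
        f"B {value_b:+,.0f} (precision {model_b.precision:.2f})"
    )
```

Assuming a $100 gain per win, model A (precision 0.30) beats model B (precision 0.40) when a false positive costs $20 (16,000 vs. 14,000), but A loses money when a false positive costs $50 (-5,000 vs. 5,000). Which model is worth more depends on the business context, not on the technical metric alone.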

Quantifying business value may be rare, but it’s just as doable as it is necessary. It’s not the rocket science part; it’s only a matter of following through with the right arithmetic to estimate how well your rocket will soar.

Data scientists must give their customers, the business stakeholders, an accessible explanation of the deployment options an ML model provides. And they must do so in terms that speak to business considerations, conveying the potential operational value using straightforward business measures like profit and savings.
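One way to present deployment options in business terms is to project the net value of each possible targeting depth, such as contacting the top 20%, 40% or 70% of cases by model score. The sketch below assumes a flat gain per true positive and a flat cost per false positive; the helper name `value_by_option`, the dollar amounts and the toy data are all hypothetical.

```python
def value_by_option(
    scores: list[float],
    labels: list[int],
    fractions: list[float],
    gain_per_tp: float,
    cost_per_fp: float,
) -> dict[float, float]:
    """Projected net value for each deployment option.

    Each option targets the top `fraction` of cases ranked by model score.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    values = {}
    for fraction in fractions:
        k = round(fraction * len(ranked))           # how many cases this option targets
        tp = sum(label for _, label in ranked[:k])  # wins among them
        fp = k - tp                                 # mistargets among them
        values[fraction] = tp * gain_per_tp - fp * cost_per_fp
    return values


# Hypothetical toy data: model scores and actual outcomes (1 = positive case).
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.50, 0.40, 0.30, 0.20, 0.10]
labels = [1, 1, 0, 1, 0, 0, 1, 0, 0, 0]

options = value_by_option(scores, labels, [0.2, 0.4, 0.7, 1.0], gain_per_tp=100, cost_per_fp=30)
for fraction, value in options.items():
    print(f"target top {fraction:.0%}: projected value {value:+,.0f}")

best = max(options, key=options.get)
print(f"most valuable option: target top {best:.0%}")
```

With these toy numbers, targeting everyone (220) is worth less than targeting the top 70% (310), because the lowest-scored cases add only false positives. A table like this gives stakeholders a direct view of the tradeoff behind each option.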

When a predictive AI team takes the strangely rare yet critical step of calculating business metrics—aka ML valuation—and delivers visibility into the projected value for each deployment option, stakeholders find this visibility crucially enlightening and a long time coming. Don’t settle for less from your data scientists.

About the author

Eric Siegel is a leading consultant and former Columbia University professor who helps companies deploy machine learning. He is the founder of the long-running Machine Learning Week conference series, the instructor of the acclaimed online course “Machine Learning Leadership and Practice – End-to-End Mastery,” executive editor of The Machine Learning Times and a frequent keynote speaker. He wrote the bestselling Predictive Analytics: The Power to Predict Who Will Click, Buy, Lie, or Die, which has been used in courses at hundreds of universities, as well as The AI Playbook: Mastering the Rare Art of Machine Learning Deployment. Eric’s interdisciplinary work bridges the stubborn technology/business gap. At Columbia, he won the Distinguished Faculty award when teaching the graduate computer science courses in ML and AI. Later, he served as a business school professor at UVA Darden. Eric also publishes op-eds on analytics and social justice. You can follow him on LinkedIn.

The Machine Learning Times © 2026 • 1221 State Street • Suite 12, 91940 •

Santa Barbara, CA 93190

Produced by: Rising Media & Prediction Impact
